I’ve covered quite a bit on voice cloning and deepfakes recently, and it got me thinking: how much audio is really needed? Is a single voicemail’s worth of data enough?

My original thought was: could a malicious actor spend enough time on the phone with someone, recording their voice, to be able to imitate and impersonate that person? If I can do it with a voicemail, I can definitely do it with a phone call.

A common attack vector at the moment is attackers calling up service desks, pretending to be an employee and asking for a password reset or for credentials. The most recent example is M&S and their cyber attack: https://www.independent.co.uk/news/business/m-s-coop-hack-scattered-spider-it-worker-b2745218.html. It’s worth noting this attack was successful without voice impersonation; however, familiarity is one of the most common ways malicious actors increase the success of their attacks.

Normally, in any good helpdesk / MSP, there should be a method to verify approval; however, some helpdesks have been very lax with this. Furthermore, if I were to call up impersonating a CEO or someone of VIP status in a company, would an employee question me?

We regularly see new starters being targeted with CEO impersonation. This typically stems from the fact that a new starter will post on LinkedIn about their new job. Attackers will then target the new employee, who may be more susceptible to an attack.

Let’s begin with a single voicemail:

Step 1 – Get More Data (Even from a 10s Voicemail)

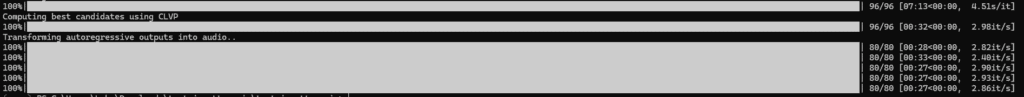

Now, if you only have 10-15 seconds of audio, how do you get more data? Well, we need to start by using the audio we have to create more. We can do this by using a text-to-speech (TTS) generator (Tortoise-TTS), feeding our voicemail into it as the baseline, and then getting it to create lots of different short audio clips. We also need to ensure the voice doesn’t sound emotionless. If we are trying to truly clone a voice, we need to ensure it has different levels of pitch, rhythm and inflection.

Let’s run the tool to generate five (5) audio clips, all saying “Hello, My name is Bleekseeks and this is a voice cloning demonstration.” and all using the voicemail as the baseline.
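As a rough sketch of what that run could look like, the snippet below only builds (and prints) a command line; it does not run anything. It assumes Tortoise-TTS is driven through the `tortoise/do_tts.py` script from its repository and that the voicemail has been saved into a custom voice folder, here given the hypothetical name `voicemail`. The flag names are taken from that script as I understand it and may differ between versions, so treat them as assumptions and check `--help` on your own install.

```python
import shlex

# The sentence from the demonstration above.
SENTENCE = "Hello, My name is Bleekseeks and this is a voice cloning demonstration."


def build_tortoise_command(text, voice, candidates=5, preset="fast", output_path="results/"):
    """Return the argv list for one Tortoise-TTS run. Nothing is executed here.

    Assumptions: the script path and flag names match the local Tortoise-TTS
    checkout, and `voice` is the name of a folder under tortoise/voices/ that
    holds the reference audio.
    """
    return [
        "python", "tortoise/do_tts.py",
        "--text", text,
        "--voice", voice,                  # hypothetical custom voice folder name
        "--preset", preset,                # faster presets trade quality for speed
        "--candidates", str(candidates),   # number of clips to produce for this text
        "--output_path", output_path,
    ]


command = build_tortoise_command(SENTENCE, voice="voicemail")
print(shlex.join(command))  # copy-pasteable shell command
```

Asking for five candidates in one run is what gives several takes of the same sentence, each with slightly different delivery.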

Done! We now have five (5) different audio clips, all saying the same thing, and the best thing is they all differ in tone, emotion, mood or expression. The clips also vary in the following ways:

- Pitch: Slight shifts in vocal pitch (higher/lower).

- Rhythm: Variation in pacing or pauses between words.

- Inflection: Emphasis on different syllables or words.

- Energy: One may sound more enthusiastic, another calmer.

Below are the audio clips generated from the single voicemail and the text-to-speech (TTS) prompt.

Audio clip 1:

Audio clip 2:

Audio clip 3:

Audio clip 4:

Audio clip 5:

Hopefully, you can hear some subtle differences. We have now created synthesised audio clips using the voice from the original voicemail.

Next, we need to repeat this multiple times to create more audio. The more data we have, the better the end product will be, or as Sherlock Holmes put it:

Step 2 – “Data! Data! I can’t make bricks without clay”

I spent about two (2) hours crafting more audio files, using the original audio file as the baseline: all different sentences, all with different pitch and emotion. For most of this, I just used ChatGPT to generate random sentences that I could then feed into Tortoise-TTS. Each run generated five (5) audio clips, and then I could rinse and repeat the process!

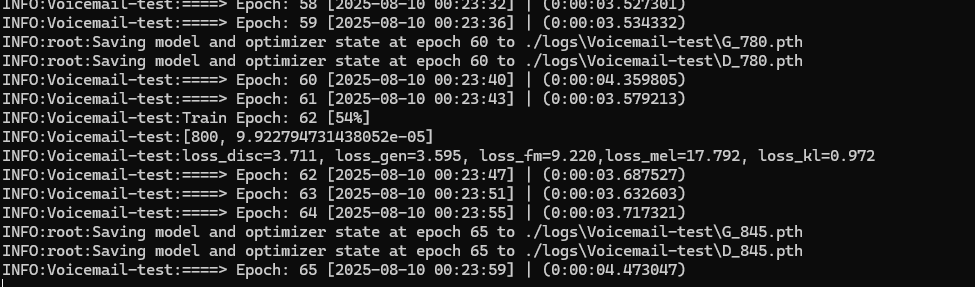

Step 3 – Training AI

I now have about 20 clips in total, all generated from that original voicemail. Altogether, that’s about 4 minutes’ worth of audio for the AI. That’s very little to train an AI model, but I am really trying to push the boundaries of the minimum needed rather than the maximum or even recommended amounts. My aim here is to determine whether the result has any resemblance to the original voicemail message.
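If you want to check how much audio you have actually collected before training, a small helper like the one below can total it up. This is only a sketch: the folder name `training_clips` is hypothetical, and it assumes the clips are plain PCM WAV files that Python’s built-in `wave` module can read. Clips saved in other encodings (for example 32-bit float WAV) would need converting first.

```python
import wave
from pathlib import Path


def clip_seconds(path):
    """Duration of one PCM WAV file in seconds (frames / sample rate)."""
    with wave.open(str(path), "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def dataset_summary(folder):
    """Return (number_of_clips, total_seconds) for every .wav file in a folder."""
    durations = [clip_seconds(p) for p in sorted(Path(folder).glob("*.wav"))]
    return len(durations), sum(durations)


if __name__ == "__main__":
    # "training_clips" is a hypothetical folder holding the generated clips.
    count, total = dataset_summary("training_clips")
    print(f"{count} clips, {total / 60:.1f} minutes of audio")
```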

How well did the AI do? Well, that’s for you to judge. I used the newly generated model to sing Coldplay – Viva La Vida. Enjoy!

Is it perfect? No. Is it pretty good considering we only had a 10-15 second original audio clip? Yes!

Recap:

I used a 10-15 second voicemail. I then used that voicemail as a baseline in a text-to-speech tool to generate more audio clips. From here, I fed the original clip and all my synthesised clips into the AI training tool to generate a model. I then used that model to sing Coldplay. This whole process took about 3 hours; most of that was idle time, and it is dependent on hardware.

Conclusion and Caveats:

So, some caveats. The training would be a lot easier if my graphics card played ball… Unfortunately, as I am using a 50xx series graphics card, CUDA / PyTorch does not work. This means I had to run Tortoise-TTS on my CPU, which significantly increases the time to generate audio.
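If you hit something similar, one thing worth checking is whether your installed PyTorch build was compiled for your GPU’s compute capability. That this was the cause here is an assumption on my part, not something confirmed. The sketch below falls back to the CPU when PyTorch is missing, when CUDA is unavailable, or when the GPU’s architecture is not in the build’s architecture list; the function names are hypothetical, and the string match is deliberately simple.

```python
def build_supports_gpu(capability, arch_list):
    """True if a (major, minor) compute capability appears in a build's arch list.

    Example: capability (8, 6) is looked up as "sm_86".
    """
    major, minor = capability
    return f"sm_{major}{minor}" in arch_list


def pick_device():
    """Return "cuda" only when the installed PyTorch build looks usable on GPU 0."""
    try:
        import torch  # optional dependency; absent means CPU only
    except ImportError:
        return "cpu"
    if not torch.cuda.is_available():
        return "cpu"
    capability = torch.cuda.get_device_capability(0)
    if build_supports_gpu(capability, torch.cuda.get_arch_list()):
        return "cuda"
    return "cpu"


print(pick_device())
```

A newer GPU paired with an older PyTorch build is the case this is meant to catch: the card is detected, but the build carries no kernels for it.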

Secondly, the clip I am cloning is monotone and very clear to begin with, with little emotion. This likely makes it easier for TTS and RVC (Retrieval-based Voice Conversion) to work with the clip, and improves the quality of the end result.

With only a few minutes of source audio, it’s possible to create something that not only resembles the original voice but can also adapt to new sentences, emotions, and contexts. The results aren’t flawless, but they’re convincing enough for an attack to succeed, especially when paired with deepfakes: https://bleekseeks.com/blog/deep-fakes-how-attackers-are-weaponising-real-time-face-swapping

How to clone a voice with AI?

The tools I used are listed below, and setup instructions for them can be found here: https://bleekseeks.com/blog/how-to-clone-a-voice-with-ai

- tortoise-tts: This will use a single audio file and generate several more from that file. Useful if you have very little audio to begin with.

- Retrieval-based-Voice-Conversion-WebUI: This is a robust tool and can be used for many impersonation and cloning tasks; however, I used the audio files generated by tortoise-tts to feed into the AI model.