Last year I covered deepfake voice impersonation and cloning (https://bleekseeks.com/blog/how-malicious-actors-can-easily-impersonate-your-voice), with the aim of showing deepfake videos in the future. Well, the future is now!

I think it’s safe to say that AI has massively improved over the last couple of years and seems to be getting better by the day. It’s incredible how easy it has made some mundane tasks. However, allow me to be all ‘doom and gloom’ for a moment and talk about one of the biggest risks of AI: deepfakes.

Let’s begin with some key stats and figures to set the scene:

Deepfake attacks have increased by 2,137% in the last three years!

Only 22% of financial institutions have implemented AI-based fraud prevention tools!

60% of consumers have encountered a deepfake video within the last year.

Human detection of deepfake images averages 62% accuracy.

To make matters worse, there are numerous free, easy-to-use deepfake tools that are publicly available. This lowers the skill and cost barrier and allows anyone to impersonate CEOs, colleagues, family members, etc., if they choose to.

If there is money to be made, malicious actors will exploit it.

Why seeing shouldn’t mean believing anymore.

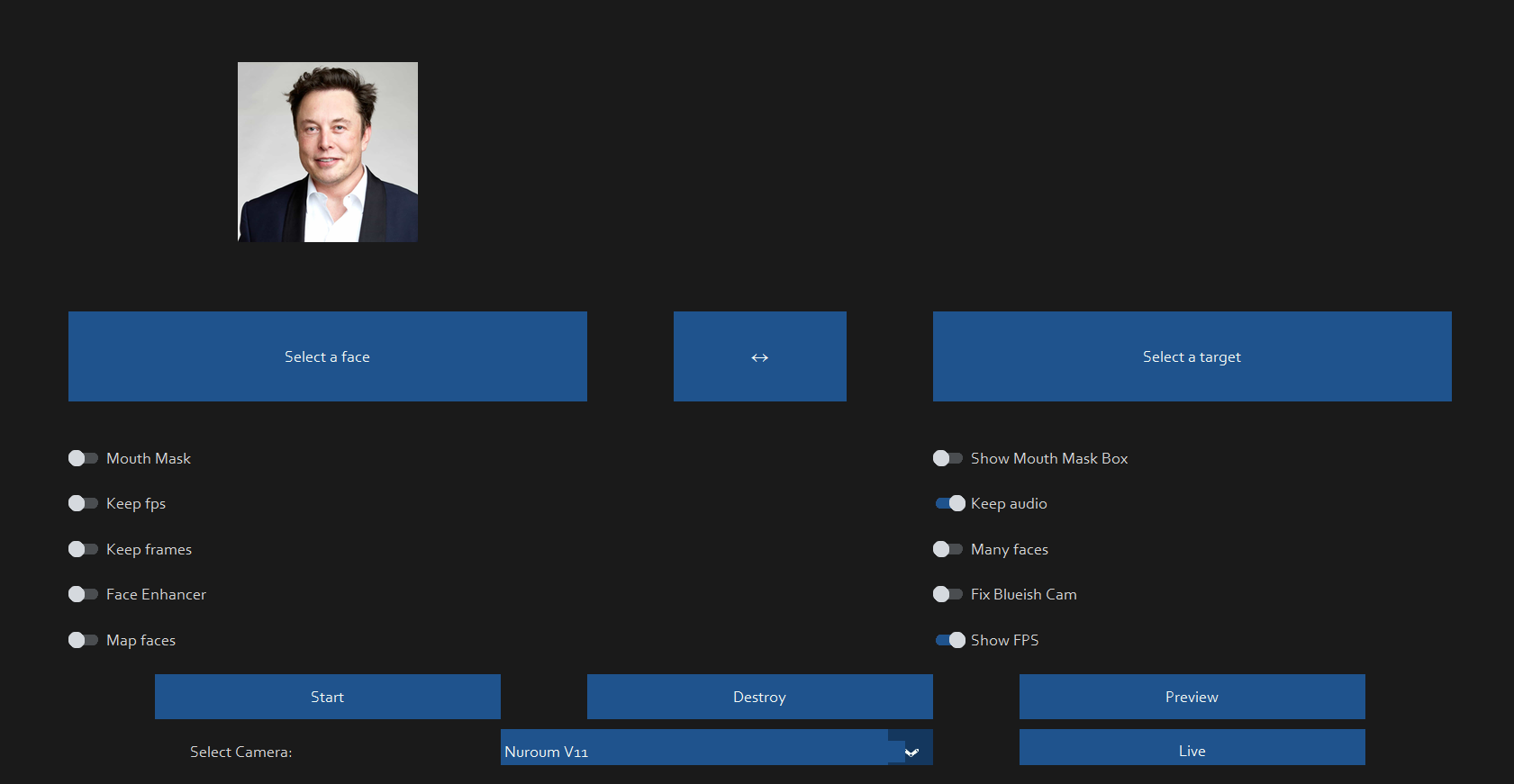

Hopefully, if I’ve done a decent enough job, you might just be able to make out Elon Musk in the video, mapped to my face. This is a live mapping that can be used in real time on Microsoft Teams, Zoom and other remote meeting software!

Results can depend on the quality of the camera, the picture used, and facial features such as hairline, face structure and complexion. However, the deepfake tool I use can mask and blend most of these features into a pretty close likeness. Wearing a hat can also help get around differing hairstyles. For reference, I used a cheap camera with just 1.5x zoom.

It’s also worth noting that this tool lets you map a face onto another face in a picture or video, which undermines photo and video evidence. The example below isn’t my finest, but you get the point… I swear it gets better.

So, how are attackers using deepfakes currently?

Let’s start with one of the sillier examples before we delve into the deeper, darker ones.

CASE Example 1: Romance Scams

What do the following all have in common?

Brad Pitt

Brad Pitt’s Mother

A French woman named Anne

€830,000

Well… they’re all related to a very successful romance scam. A French woman believed she was in a relationship with Brad Pitt and ended up being scammed out of €830,000. It began with a message from someone claiming to be Brad Pitt’s mother, stating Anne was exactly what her son needed. Soon after, ‘Brad Pitt’ himself reached out. They chatted and fell in love, or so she thought. Over time, this built trust, emotional investment and familiarity. ‘Brad Pitt’ then claimed he was in hospital and needed gifts and money. Below are some of the deepfaked images sent by the scammers.

There were more than enough red flags that should have helped Anne realise something wasn’t right or, at the very least, raised her suspicions. But here’s the unfortunate reality for everyone reading this: you probably won’t get the same luxury of obvious mistakes and warning signs. AI is only getting better, more realistic, and far more convincing.

CASE Example 2: Cryptocurrency scams

If you’ve used Twitter/X, Facebook or YouTube within the last year, you’ve probably seen one of these bogus cryptocurrency scams. They are often run as a kind of ‘pump & dump’ scheme: a form of market manipulation where the price of an asset is artificially inflated through false or misleading information, only for insiders to sell at the peak before it crashes. Scammers typically impersonate Elon Musk or Jeff Bezos, as this lends ‘credibility’ and tricks victims into believing a very wealthy public figure is trying to do good and spread the wealth… Yeah right, like that would ever happen!

CASE Example 3: Sextortion & Blackmail

Sextortion scams have been around for decades. Usually, it’s a generic email claiming to have captured compromising footage via malware and demanding payment to keep it private. For those who don’t realise it’s fake, the impact can be devastating. This has resulted in multiple cases of self-harm and even suicide.

Now layer in deepfakes. Attackers can generate fake but convincing images or videos with your face. It might not be flawless, but it’s often believable enough to scare someone into paying.

Catfishing is also evolving: fake identities backed by deepfaked video and audio are making social engineering more convincing and harder to spot.

CASE Example 4: Job interviews

Deepfakes have been used in job interview scenarios such as the two below:

Fake Interviewers – Common in Nation-State Attacks (e.g. North Korea)

Attackers post fake job listings, schedule interviews, and present a deepfake of a legitimate recruiter. Once trust is established, they send malicious links or documents that lead to credential phishing or malware infection.

Sources:

https://www.hoganlovells.com/en/publications/north-korealinked-threat-actors-falsified-companies

Fake Candidates – Targeting Remote Roles

An attacker deepfakes their appearance to impersonate someone during a remote interview. If successful, they’re onboarded, given access to internal tools, VPNs, and accounts, opening the door for lateral movement, data theft, or ransomware deployment.

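Sources:

https://www.iproov.com/blog/knowbe4-deepfake-wake-up-call-remote-hiring-security

https://blog.knowbe4.com/fbi-warns-of-deepfakes-used-to-apply-for-remote-jobs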

How to stop / prevent deepfakes?

The wheels are already in motion and the momentum isn’t slowing. There’s no jumping off the train now. We need to adapt or get left behind.

Recommendations:

DeepFake detection tools (YotiID, Microsoft Video Authenticator, Intel’s FakeCatcher)

There are various AI detection tools out there that do a good job of detecting deepfakes. They’re not perfect, but detection is a constantly moving target as deepfakes improve, so that’s to be expected.

Training and Awareness

I’d imagine AI detection will be a bit like a mail filter: it will block lots of the rubbish, but it definitely won’t catch everything.

Cyber security awareness is key! Build awareness among employees and the public. Don’t trust content just because it’s on camera, in the same way you shouldn’t blindly trust an email just because it appears to come from a trusted sender.

Digital Watermarking & Content Authentication

It still seems to be very early doors for digital watermarking and content signatures, but frameworks like C2PA (Coalition for Content Provenance and Authenticity) are designed to help track where content came from and any changes made to it.
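To make the idea behind content authentication a little more concrete, here’s a minimal sketch of the core principle: a signature computed over the content stops verifying as soon as the content is altered. This is a simplified stand-in, not C2PA. It assumes a hypothetical shared publisher key and uses HMAC-SHA256, whereas C2PA uses certificate-based signatures embedded in a manifest. The function names are hypothetical.

```python
import hashlib
import hmac

# Hypothetical publisher key. Real provenance schemes such as C2PA use
# public-key certificates rather than a shared secret like this.
PUBLISHER_KEY = b"example-publisher-secret"


def sign_content(content: bytes, key: bytes = PUBLISHER_KEY) -> str:
    """Return a hex HMAC-SHA256 tag computed over the raw content bytes."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, tag: str, key: bytes = PUBLISHER_KEY) -> bool:
    """Recompute the tag for the content and compare it in constant time."""
    expected = sign_content(content, key)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    original = b"frame data from the original video"
    tag = sign_content(original)

    tampered = b"frame data from the face-swapped video"
    print(verify_content(original, tag))  # True: unchanged since signing
    print(verify_content(tampered, tag))  # False: content was altered
```

Bear in mind this only shows the content hasn’t changed since it was signed; it can’t tell you whether the original recording was genuine in the first place.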

Authentication methods

Biometrics can help, provided good security practices are met, such as liveness detection. Liveness detection is designed to look for signs of life, such as micro facial movements, lighting, eye tracking and blinking.

Ensure it’s not just a basic 2D scan, as these can easily be fooled. They’re less common these days, but they’re still out there.
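Blinking is one of the easier liveness signals to illustrate. Below is a minimal sketch using the eye aspect ratio (EAR) technique, which drops sharply when the eye closes, and counting runs of closed-eye frames as blinks. It assumes you already have six landmark points per eye from some face-landmark detector; the detector, the 0.2 threshold and the two-frame minimum are assumptions for illustration, not settings from any particular product. On its own this is a weak check, since newer deepfakes can reproduce blinking, which is why real liveness detection combines many signals.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks ordered p1..p6, where p1 and p4 are the corners.

    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|); it falls towards 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame: List[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`.

    The threshold and minimum run length are assumed example values.
    """
    blinks = 0
    closed_run = 0
    for ear in ear_per_frame:
        if ear < threshold:
            closed_run += 1
        else:
            if closed_run >= min_frames:
                blinks += 1
            closed_run = 0
    if closed_run >= min_frames:  # the clip ended mid-blink
        blinks += 1
    return blinks


if __name__ == "__main__":
    # Hypothetical landmarks for a wide-open eye.
    open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
    print(round(eye_aspect_ratio(open_eye), 3))  # 0.667

    # One two-frame blink, then a single-frame dip that is ignored as noise.
    print(count_blinks([0.3, 0.3, 0.1, 0.1, 0.3, 0.3, 0.15, 0.3]))  # 1
```

A feed that never registers a blink over a long call isn’t proof of a deepfake, but it’s the kind of signal a liveness check might weigh alongside others.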

Good security practices

Be skeptical. Be cautious. Be vigilant. Use MFA, follow best practices for biometrics, have robust interview processes, implement zero trust, etc.

Sources:

https://www.eftsure.com/statistics/deepfake-statistics/

https://www.dhs.gov/sites/default/files/publications/increasing_threats_of_deepfake_identities_0.pdf

https://www.hoganlovells.com/en/publications/north-korealinked-threat-actors-falsified-companies

https://www.iproov.com/blog/knowbe4-deepfake-wake-up-call-remote-hiring-security

https://blog.knowbe4.com/fbi-warns-of-deepfakes-used-to-apply-for-remote-jobs