In a world where technology continues to blur the line between reality and fiction, cyber attacks will only increase in number and sophistication as attackers harness the power of AI. It is becoming increasingly easy for malicious actor(s) to mimic and impersonate your voice or the voice of someone you know. Paired with Face AI, this makes it incredibly difficult to tell whether the person you’re hearing and seeing is truly who they claim to be.

Various methods and techniques can be employed to clone a voice, and over time, they’re becoming scarily accurate. The tool I will be using to conduct the tests can be found here: https://github.com/RVC-Project/Retrieval-based-Voice-Conversion-WebUI

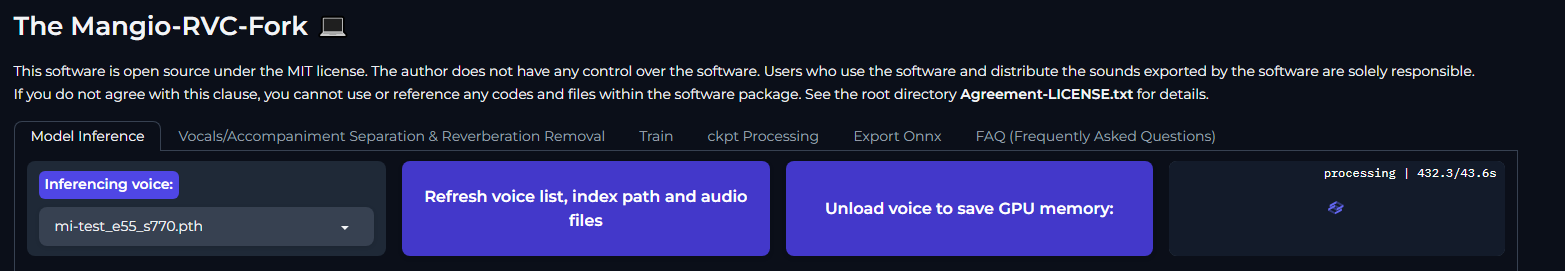

It’s free and has worked well for me during testing. It has several features, but the two most useful for our purposes are:

Model Inference

This feature lets you load a .pth file (the template file), select an audio file, and run the template against it to generate a new vocal version.

Train (Machine Learning AI)

To use this, you need to create a dataset, made up of either one long clip or several shorter clips. Once training finishes, it produces a .pth file (the template file).
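Because the dataset is just a collection of audio clips, it can be handy to check how much audio it actually contains before training. The sketch below is a minimal, hypothetical helper (not part of the RVC WebUI) that assumes the clips are uncompressed `.wav` files sitting in a single folder, and uses only Python’s standard `wave` module to read each file’s header.

```python
import wave
from pathlib import Path


def dataset_duration_seconds(folder: str) -> float:
    """Return the combined length, in seconds, of every .wav clip in `folder`.

    Hypothetical helper for sanity-checking a training dataset. It only reads
    WAV headers; files with other extensions are ignored.
    """
    total = 0.0
    for path in sorted(Path(folder).glob("*.wav")):
        with wave.open(str(path), "rb") as clip:
            # Duration of one clip = number of frames / frames per second.
            total += clip.getnframes() / clip.getframerate()
    return total
```

Calling `dataset_duration_seconds("my_dataset") / 60` would give the total in minutes, where `my_dataset` is a placeholder folder name. Compressed formats such as MP3 would need converting to WAV first, since `wave` only reads uncompressed PCM.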

RVC stands for Retrieval-based Voice Conversion. RVC has multiple active communities, all downloading, recording, and splicing audio to create polished templates that others can use. Mostly this is done as a joke, such as getting a famous figure like Obama to discuss something benign, or using audio snippets of your favourite artist to sing a song in a completely different genre.

There are several sites for downloading these templates; the one I used was: https://voice-models.com/

I present to you: Johnny Cash singing Barbie Girl – forgive me, please, and consider this an audio warning… It’s actually pretty good, to be honest.

Now that we have that out of the way, let’s focus on why this isn’t necessarily a good thing.

Imagine receiving a call from a colleague, friend, or loved one: can you be certain the person on the other end is truly who they claim to be? The answer is becoming increasingly elusive, especially with contact spoofing and even machine learning Face AI in the mix.

The audio snippets required for these impersonations don’t necessarily have to be extensive; however, the more audio used, the more accurate the result. For example, if you’re in a Zoom or Microsoft Teams call, I reckon that by the end of the call you could create a pretty functional template of the presenter.

I tested with a few audio clips of myself, totaling around 10 minutes, and surprisingly I managed to generate a somewhat convincing imitation of my own voice using the Train (machine learning) feature. The only real downside is computing power: if you want a high-quality audio file, it will take ages! For my file, I selected 50 epochs, which is on the lower end; the setting goes as high as 10,000! But what is an epoch, and why does it matter?

An epoch is one complete pass of the training data through the algorithm, and the number of epochs is a crucial hyperparameter that shapes the model’s training process. It’s important to note that more epochs don’t necessarily translate to better quality; there’s a point of diminishing returns where quality plateaus or even decreases. If you provided 10 hours of audio, you would likely select a higher number of epochs, and training could take days.
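RVC trains a far larger neural network than anything shown here, but the idea of an epoch is the same at any scale. As a toy illustration only, the sketch below fits a straight line with stochastic gradient descent: each run of the outer loop over the whole dataset is one epoch, and recording the error after every pass makes the diminishing returns visible. The function name `train_line`, the synthetic data (noisy points on y = 3x + 1), and the learning rate are all assumptions chosen for the example.

```python
import random


def train_line(data, epochs, lr=0.01):
    """Fit y = w*x + b by per-sample stochastic gradient descent.

    One epoch = one complete pass over every (x, y) pair in `data`.
    Returns (w, b, history), where history[i] is the mean squared error
    measured after epoch i + 1.
    """
    w, b = 0.0, 0.0
    history = []
    for _ in range(epochs):
        for x, y in data:  # visiting every sample once = one epoch
            err = (w * x + b) - y
            w -= lr * 2 * err * x  # gradient of err**2 with respect to w
            b -= lr * 2 * err      # gradient of err**2 with respect to b
        mse = sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)
        history.append(mse)
    return w, b, history


# Hypothetical toy dataset: 200 noisy points on the line y = 3x + 1.
rng = random.Random(0)
data = []
for _ in range(200):
    x = rng.random()
    data.append((x, 3 * x + 1 + rng.gauss(0, 0.1)))

w, b, history = train_line(data, epochs=50)
early_gain = history[0] - history[9]   # improvement from epoch 1 to epoch 10
late_gain = history[39] - history[49]  # improvement from epoch 40 to epoch 50
print(f"w={w:.2f}, b={b:.2f}")
print(f"gain over epochs 1-10: {early_gain:.4f}, over epochs 40-50: {late_gain:.4f}")
```

Almost all of the improvement happens in the first handful of epochs; after that the error settles at the level of the noise in the data, so extra epochs cost time without buying accuracy.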

Once the AI machine learning model is trained and you have a functional voice template, the next step is running it against a desired audio clip. Taking the example of a Johnny Cash imitation, you’d start by gathering multiple clips of Johnny Cash singing. These clips would then be fed into the model for training, with an appropriate number of epochs. Once training completes, you’d use the new template to convert a chosen audio file into his voice.

For example, I am currently running a 50-epoch template against a 3-minute audio file; it has taken 35 minutes so far and still isn’t finished. Processing time varies with the quality settings and the hardware, primarily the GPU.
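To make timings like this easier to compare across runs or machines, it can help to express them as a ratio of processing time to audio length. The sketch below uses a hypothetical helper, `slowdown_lower_bound`, fed with the 35 minutes and 3 minutes quoted above. Because the job was still running, the result is only a lower bound.

```python
def slowdown_lower_bound(elapsed_minutes: float, audio_minutes: float) -> float:
    """Minutes of processing spent per minute of audio so far.

    While a job is still running this is only a lower bound on the final
    ratio, because elapsed_minutes keeps growing until it finishes.
    """
    if audio_minutes <= 0:
        raise ValueError("audio_minutes must be positive")
    return elapsed_minutes / audio_minutes


ratio = slowdown_lower_bound(elapsed_minutes=35, audio_minutes=3)
print(f"at least {ratio:.1f} minutes of processing per minute of audio")
```

This prints `at least 11.7 minutes of processing per minute of audio`, so conversion on that setup ran well over ten times slower than real time.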


In short, although it takes some effort, impersonating a voice is entirely achievable. Audio can be sourced from a voicemail, a recorded meeting, or even a service provider that records its calls. Once enough audio has been captured, a malicious actor(s) could use it to conduct a social engineering attack.

If a close friend called and asked you to browse to a link or download a file, I suspect most of the general public would proceed without giving it a second thought. Now imagine a company director or spokesman: these roles typically involve a good amount of public speaking, often with publicly available recordings. Obtain a couple of these and you’re in! A social engineering attack could then be conducted against an employee to trick them into performing a malicious action.

This is obviously an easier scenario given their profile and publicity, but using the example below, the first three videos alone provide close to two hours of audio!

Although impersonating a voice is easy, it should be noted that unless you have an extremely monotone voice, the clone’s vocal mannerisms would differ from yours and might not quite tally up. Spotting this depends on knowing the person, but there are small telltale signs that become obvious if you listen carefully, unless a malicious actor(s) has an exceptionally large amount of audio of your voice.